✅ In this final session, let's walk through how to build a model with Create ML and then review the code that uses it.

First, launch Create ML from Xcode.

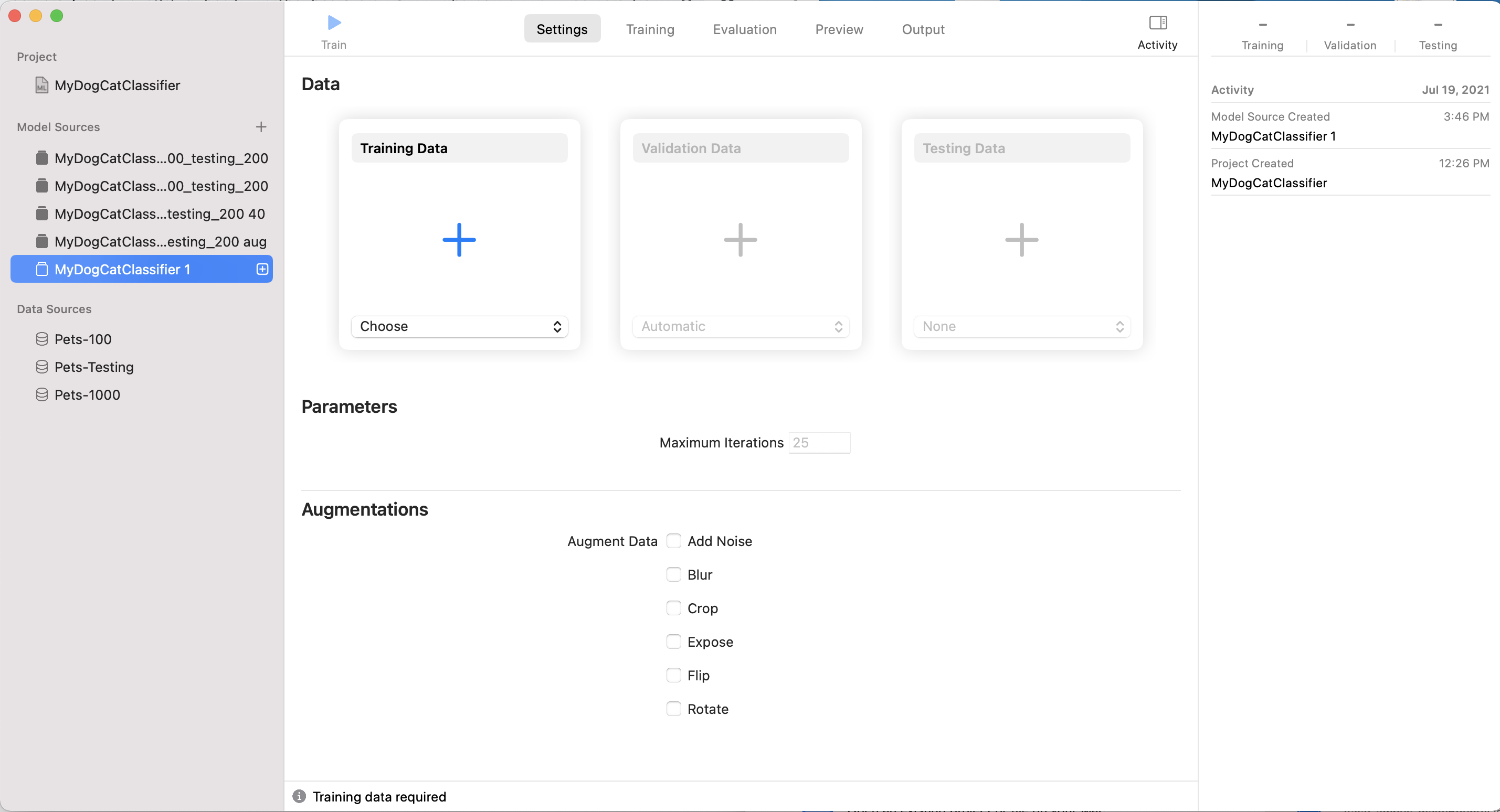

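When it opens you see a screen like this. I had already created a project under Model Sources, but a newly created project starts out looking like this.

Next, add your data in the Training Data section.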

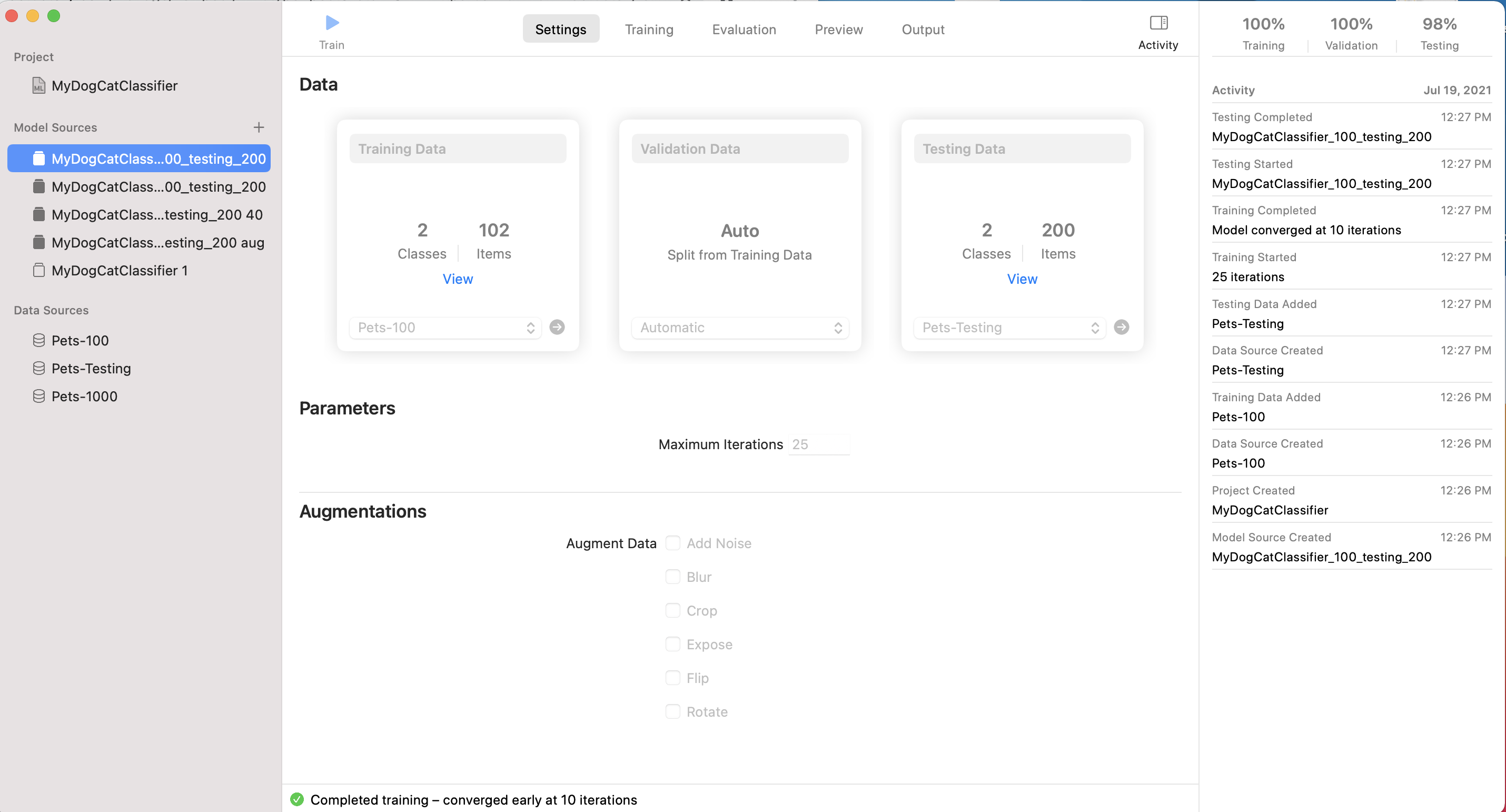

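Put pets-100 in Training Data and pets-testing in Testing Data.

pets-practice is for later, to try the model out once training is finished.

❗️Add the pets-100 folder itself. If you add only a subfolder such as dog, you get an error, because Create ML reads each subfolder of the folder you provide as one class label.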
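The folder rule above can be checked before training. The following is a minimal sketch using only Foundation; the helper names `classLabels(inTrainingFolder:)` and `looksLikeTrainingFolder(_:)` are made up for illustration and are not part of Create ML.

```swift
import Foundation

/// Returns the sorted names of the immediate subfolders of `root`.
/// An image classifier project treats each such subfolder as one class label.
func classLabels(inTrainingFolder root: URL) -> [String] {
    let fileManager = FileManager.default
    guard let names = try? fileManager.contentsOfDirectory(atPath: root.path) else {
        return []
    }
    let folders = names.filter { name in
        var isDirectory: ObjCBool = false
        let path = root.appendingPathComponent(name).path
        return fileManager.fileExists(atPath: path, isDirectory: &isDirectory)
            && isDirectory.boolValue
    }
    return folders.sorted()
}

/// Hypothetical sanity check: a usable training folder needs at least two class subfolders.
func looksLikeTrainingFolder(_ root: URL) -> Bool {
    return classLabels(inTrainingFolder: root).count >= 2
}
```

Pointing this at a folder that contains one subfolder per class should return true, while pointing it at a single class subfolder (which holds only images) should return false.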

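Then just start training. It really is that simple.

✅ Parameter (Maximum Iterations): the number of times training repeats over the data. A company like Google, for example, has so much data that a small value is fine. A startup usually has only a limited amount of data, so it trains repeatedly to get the best possible result out of that limited data.

❗️One thing to watch out for: if you repeat training on the same data too many times in a test environment, the model can fail to judge real-world data correctly, so accuracy actually drops even though you trained more. This is called overfitting.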
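One common way to notice overfitting is to watch accuracy on held-out data and see where it peaks. The sketch below assumes you have recorded validation accuracy after each iteration; the function name and the numbers are invented for illustration and do not come from Create ML.

```swift
/// Returns the 1-based iteration with the highest validation accuracy
/// (the earliest one on ties), or nil if the history is empty.
func bestIteration(validationAccuracy: [Double]) -> Int? {
    guard !validationAccuracy.isEmpty else { return nil }
    var bestIndex = 0
    for (index, accuracy) in validationAccuracy.enumerated()
    where accuracy > validationAccuracy[bestIndex] {
        bestIndex = index
    }
    return bestIndex + 1
}

// Made-up history: accuracy rises at first, then falls as the model overfits.
let history = [0.71, 0.80, 0.86, 0.88, 0.85, 0.81]
let best = bestIteration(validationAccuracy: history)
```

With this history, iterations after the peak only make the model worse on unseen data, which is the situation described above.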

✅ Augmentations

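Augment Data applies variations such as noise or rotation to the training images so that the model learns from more varied conditions, which can improve accuracy.

The result looks like this. Every case shown here reports 100%, but the real accuracy may not be exactly 100%.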
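To make the idea concrete, here is a toy sketch of two augmentations on a tiny grayscale image stored as a 2D array. It only illustrates what "flip" and "rotate" mean; it is not how Create ML implements them, and the type and function names are assumptions.

```swift
/// A tiny grayscale image: rows of pixel values.
typealias Image = [[Int]]

/// Mirrors the image left to right.
func flippedHorizontally(_ image: Image) -> Image {
    return image.map { row in Array(row.reversed()) }
}

/// Rotates the image 90 degrees clockwise.
func rotatedClockwise(_ image: Image) -> Image {
    guard let width = image.first?.count else { return [] }
    let rowsBottomToTop = Array(image.reversed())
    // Column c of the source, read bottom to top, becomes row c of the result.
    return (0..<width).map { column in
        rowsBottomToTop.map { row in row[column] }
    }
}

let original: Image = [[1, 2],
                       [3, 4]]
// One labeled image becomes several training examples with the same label.
let augmented = [original, flippedHorizontally(original), rotatedClockwise(original)]
```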

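Precision: of everything the model labeled true, what percentage is actually true?

Recall: of everything that is actually true, what percentage did the model label true?

The Wikipedia article explains this very well!

In short, the higher both numbers are, the better.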
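The two definitions can be written out directly. This is a small sketch with a hypothetical `BinaryCounts` type and made-up counts. Both functions return nil when the ratio is undefined.

```swift
/// Outcome counts for one class, treated as the "positive" class.
struct BinaryCounts {
    var truePositives: Int   // predicted positive, actually positive
    var falsePositives: Int  // predicted positive, actually negative
    var falseNegatives: Int  // predicted negative, actually positive
}

/// Precision = TP / (TP + FP): how many positive predictions were right.
func precision(_ counts: BinaryCounts) -> Double? {
    let predictedPositive = counts.truePositives + counts.falsePositives
    guard predictedPositive > 0 else { return nil }
    return Double(counts.truePositives) / Double(predictedPositive)
}

/// Recall = TP / (TP + FN): how many actual positives were found.
func recall(_ counts: BinaryCounts) -> Double? {
    let actualPositive = counts.truePositives + counts.falseNegatives
    guard actualPositive > 0 else { return nil }
    return Double(counts.truePositives) / Double(actualPositive)
}

// Made-up example: 8 dogs found correctly, 2 cats mistaken for dogs, 4 dogs missed.
let dogCounts = BinaryCounts(truePositives: 8, falsePositives: 2, falseNegatives: 4)
let dogPrecision = precision(dogCounts)  // 8 / 10
let dogRecall = recall(dogCounts)        // 8 / 12
```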

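Train several candidate models, test each one, and use the one with the highest scores.

ROC curve (concept): you can also look this up on Google. The larger the area under the curve, the better the model separates the classes.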
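The area under the ROC curve can also be read as the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one. The sketch below computes it that way by comparing every pair; the function name and the scores are made up for illustration.

```swift
/// Area under the ROC curve, computed as the fraction of (positive, negative)
/// pairs in which the positive example scores higher. Ties count as half.
/// Returns nil if either group is empty.
func areaUnderROC(positiveScores: [Double], negativeScores: [Double]) -> Double? {
    guard !positiveScores.isEmpty, !negativeScores.isEmpty else { return nil }
    var wins = 0.0
    for positive in positiveScores {
        for negative in negativeScores {
            if positive > negative {
                wins += 1
            } else if positive == negative {
                wins += 0.5
            }
        }
    }
    return wins / Double(positiveScores.count * negativeScores.count)
}

// Made-up "dog" confidence scores for actual dogs and actual cats.
let dogScores = [0.9, 0.8, 0.4]
let catScores = [0.7, 0.3, 0.2]
let auc = areaUnderROC(positiveScores: dogScores, negativeScores: catScores)
```

A value of 1.0 means every positive outranks every negative, and 0.5 is what random guessing would give.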

What Core ML provides has its limits, so AI engineers can use other tools as well.

Turi Create: a tool that Apple provides and documents.

TensorFlow: can be adapted for use from Swift.

PyTorch: supports iOS natively, which also makes it a good option.

✅ Code review!

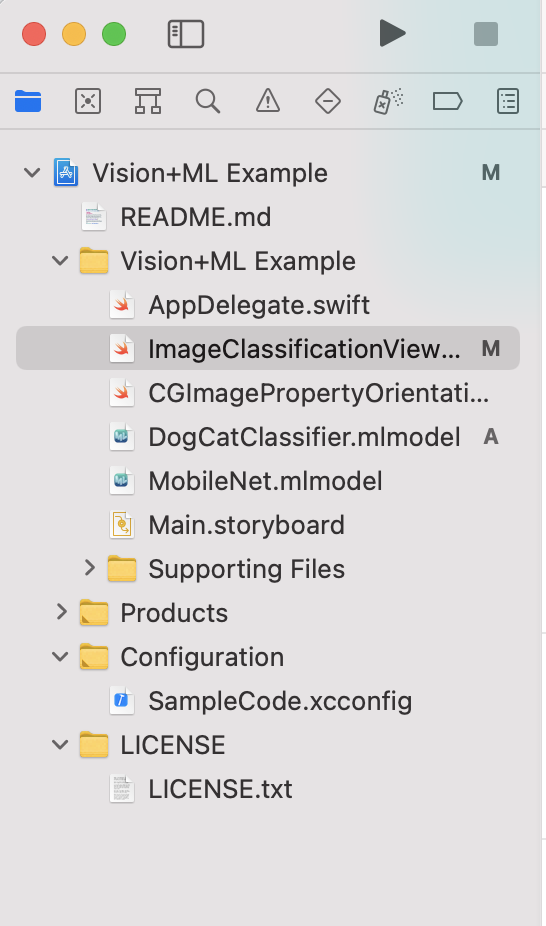

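✅ This is a sample project provided by Apple, and we will use it for the exercise.

Think of DogCatClassifier as the ML model file we created, added to the project after training has finished.

✅ Let's take a look at ImageClassificationViewController.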

/*
See LICENSE folder for this sample’s licensing information.

Abstract:
View controller for selecting images and applying Vision + Core ML processing.
*/

import UIKit
import CoreML
import Vision
import ImageIO

class ImageClassificationViewController: UIViewController {
    // MARK: - IBOutlets

    @IBOutlet weak var imageView: UIImageView!
    @IBOutlet weak var cameraButton: UIBarButtonItem!
    @IBOutlet weak var classificationLabel: UILabel!

    // MARK: - Image Classification

    /// - Tag: MLModelSetup
    lazy var classificationRequest: VNCoreMLRequest = {
        do {
            /*
             Use the Swift class `MobileNet` Core ML generates from the model.
             To use a different Core ML classifier model, add it to the project
             and replace `MobileNet` with that model's generated Swift class.
             */
            // Wrap our trained Core ML model in a Vision model (VNCoreMLModel).
            let model = try VNCoreMLModel(for: DogCatClassifier().model)

            // The completion handler runs after the request has been performed
            // and passes the outcome on to processClassifications.
            let request = VNCoreMLRequest(model: model, completionHandler: { [weak self] request, error in
                self?.processClassifications(for: request, error: error)
            })

            // The picked image may have a different size or aspect ratio than the model input,
            // so this option decides how it is cropped and scaled. There are three main choices:
            // .centerCrop, .scaleFit (fit the whole image inside), and .scaleFill (fill the input completely).
            // There is no single right answer. Use the option the model was trained with;
            // .centerCrop is reportedly the most common.
            request.imageCropAndScaleOption = .centerCrop
            return request
        } catch {
            fatalError("Failed to load Vision ML model: \(error)")
        }
    }()

    /// - Tag: PerformRequests
    func updateClassifications(for image: UIImage) {
        classificationLabel.text = "Classifying..."

        // This initializer should come from an extension included in the sample project.
        let orientation = CGImagePropertyOrientation(image.imageOrientation)
        guard let ciImage = CIImage(image: image) else { fatalError("Unable to create \(CIImage.self) from \(image).") }

        DispatchQueue.global(qos: .userInitiated).async {
            let handler = VNImageRequestHandler(ciImage: ciImage, orientation: orientation)
            do {
                try handler.perform([self.classificationRequest])
            } catch {
                /*
                 This handler catches general image processing errors. The `classificationRequest`'s
                 completion handler `processClassifications(_:error:)` catches errors specific
                 to processing that request.
                 */
                print("Failed to perform classification.\n\(error.localizedDescription)")
            }
        }
    }

    /// Updates the UI with the results of the classification.
    /// - Tag: ProcessClassifications
    // Called when a request finishes: after a photo is picked from the library,
    // it is analyzed and the result is shown as text.
    // Remember that all of this runs when an image is selected. The key piece at that point
    // is the Vision request handler (VNImageRequestHandler), which is created in
    // updateClassifications and actually performs the request.
    func processClassifications(for request: VNRequest, error: Error?) {
        DispatchQueue.main.async {
            guard let results = request.results else {
                // Avoid force-unwrapping: `error` can be nil even when there are no results.
                let reason = error?.localizedDescription ?? "Unknown error"
                self.classificationLabel.text = "Unable to classify image.\n\(reason)"
                return
            }
            // The `results` will always be `VNClassificationObservation`s, as specified by the Core ML model in this project.
            let classifications = results as! [VNClassificationObservation]

            // The actual results are handled below.
            // Our model has only 2 classes, whereas MobileNet has as many as 1000.
            // Take each class result from the model and build a description from it.
            if classifications.isEmpty {
                self.classificationLabel.text = "Nothing recognized."
            } else {
                // Display top classifications ranked by confidence in the UI.
                let topClassifications = classifications.prefix(2)
                let descriptions = topClassifications.map { classification in
                    // Formats the classification for display; e.g. "(0.37) cliff, drop, drop-off".
                    // Each result carries a class identifier and a confidence, which we turn into a string.
                    return String(format: " (%.2f) %@", classification.confidence, classification.identifier)
                }
                self.classificationLabel.text = "Classification:\n" + descriptions.joined(separator: "\n")
            }
        }
    }

    // MARK: - Photo Actions

    @IBAction func takePicture() {
        // Show options for the source picker only if the camera is available.
        guard UIImagePickerController.isSourceTypeAvailable(.camera) else {
            presentPhotoPicker(sourceType: .photoLibrary)
            return
        }

        let photoSourcePicker = UIAlertController()
        let takePhoto = UIAlertAction(title: "Take Photo", style: .default) { [unowned self] _ in
            self.presentPhotoPicker(sourceType: .camera)
        }
        let choosePhoto = UIAlertAction(title: "Choose Photo", style: .default) { [unowned self] _ in
            self.presentPhotoPicker(sourceType: .photoLibrary)
        }

        photoSourcePicker.addAction(takePhoto)
        photoSourcePicker.addAction(choosePhoto)
        photoSourcePicker.addAction(UIAlertAction(title: "Cancel", style: .cancel, handler: nil))

        present(photoSourcePicker, animated: true)
    }

    // Swift 4.2 and later spell this type UIImagePickerController.SourceType.
    func presentPhotoPicker(sourceType: UIImagePickerController.SourceType) {
        let picker = UIImagePickerController()
        picker.delegate = self
        picker.sourceType = sourceType
        present(picker, animated: true)
    }
}

extension ImageClassificationViewController: UIImagePickerControllerDelegate, UINavigationControllerDelegate {
    // MARK: - Handling Image Picker Selection

    // Swift 4.2 and later key the info dictionary by UIImagePickerController.InfoKey.
    func imagePickerController(_ picker: UIImagePickerController, didFinishPickingMediaWithInfo info: [UIImagePickerController.InfoKey: Any]) {
        picker.dismiss(animated: true)

        // We always expect `imagePickerController(:didFinishPickingMediaWithInfo:)` to supply the original image.
        let image = info[.originalImage] as! UIImage
        imageView.image = image
        updateClassifications(for: image)
    }
}

The full code is written out above. I have added comments, so read through them.
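The comments on `imageCropAndScaleOption` mention center crop and scale-to-fit. The geometry behind those two options can be sketched in plain Swift. This assumes a square model input, and the `Region` type and both function names are made up for illustration; it approximates the idea and is not Vision's actual implementation.

```swift
/// A rectangle in source-image pixel coordinates (hypothetical helper type).
struct Region: Equatable {
    var x, y, width, height: Double
}

/// Center crop: keep the largest centered square and discard the rest of the long side.
func centerCropRegion(imageWidth: Double, imageHeight: Double) -> Region {
    let side = min(imageWidth, imageHeight)
    return Region(x: (imageWidth - side) / 2,
                  y: (imageHeight - side) / 2,
                  width: side,
                  height: side)
}

/// Scale to fit: the uniform scale factor that makes the whole image
/// fit inside a square input of side `inputSide`, keeping the aspect ratio.
func scaleFitFactor(imageWidth: Double, imageHeight: Double, inputSide: Double) -> Double {
    return inputSide / max(imageWidth, imageHeight)
}

// Made-up example: a 400 x 300 photo and a 224 x 224 model input.
let cropped = centerCropRegion(imageWidth: 400, imageHeight: 300)
let fitScale = scaleFitFactor(imageWidth: 400, imageHeight: 300, inputSide: 224)
```

Center crop loses the left and right edges of a landscape photo, while scale-to-fit keeps everything but leaves part of the model input unused. That trade-off is why the option should match how the training images were prepared.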
